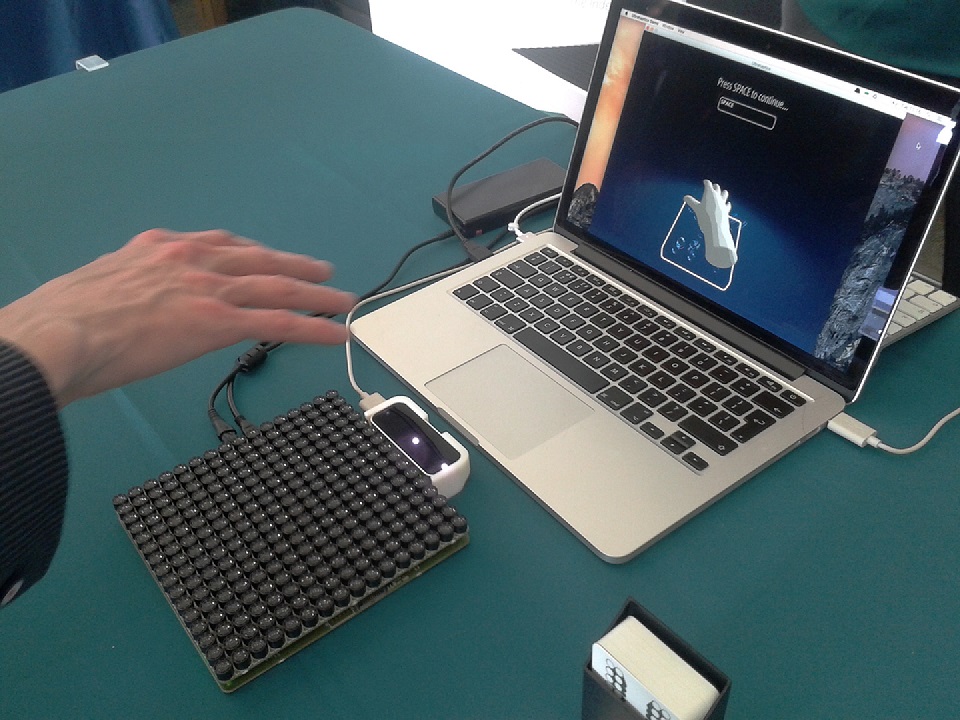

The South West VR expo took place recently, and many exhibitors descended on the UK city of Bristol to show off their latest demos and projects, as many believe VR is about to be unleashed onto the world in a big way. One of the exhibitors was Ultrahaptics, a new tech startup that works with ultrasonic waves to make you quite literally feel something that isn’t there. The tech demo on show ran through a variety of different virtual setups, and when you place your hand above the device, it really works.

The demos included a “force field” that you can feel, virtual bubbles floating into your hand, and a Breakout-style minigame where your hand was the paddle.

After Ultrahaptics CTO Tom Carter gave a presentation about the work they’re doing, VGU sat down with him to talk more about the technology and its applications in gaming.

VGU: As you were saying [in the presentation], “you’ve got audio and visual, but where’s tactile?” Did you start out saying “how do we do tactile” or did you arrive at it from elsewhere?

Tom Carter: It wasn’t quite that direction, actually, but we came across that thought pretty quickly. I actually started work on what became Ultrahaptics during my undergrad degree, so we had to do a research project, so we gotta find a supervisor…So I did Computer Science so I just spent three and a half years sat around doing computer programming, so I thought I’d do something that has not just the programming but something else as well, and I was really quite into human-computer interaction, different ways of controlling computers, and I found a professor [at the department], and he had this crazy idea that you could feel things using ultrasound, which I thought was…basically like, crazy…but crazy’s good, it makes it interesting.

VGU: Well, it does work, I had a chance to go on it, and it generates a 3D model of your hand when you put it over the device…

TC: We don’t actually do the hand-sensing, as such, we’re just doing the feedback…but, you put your virtual reality goggles on and if you have no arms [in the game], then you raise your hands and they’re not there, so you’ve lost your arms, which is bad. So you then track where the hands are, and you can introduce a hand on the scene, and Leap Motion is doing a really good job of that at the moment, I think…you can get a little adapter for the Oculus so you can see your hands.

TC: So you can see your hands, which is great, but as soon as you use them, there’s no feeling. So that’s the next barrier that then breaks down.

VGU: So that little device that you had, that’s obviously a working prototype. Is that literally just to demonstrate the technology or do you see that becoming something you could sell?

TC: So that’s not a commercial product, it’s our evaluation platform. So we’re using that for internal development, that’s what we play with in the office every day, but also for engaging with other customers who may want to invest [and put] our technology into their devices, so that they can get hands-on with it and have their own version in the office and start prototyping and start working out what they can do with it, what are the possibilities, etc.

VGU: What application do you see in gaming? Obviously there’s the idea that you could pick up objects, but if you’re sat at a desk with an Oculus on, would you have one of these [Ultrahaptics devices] on the desk?

TC: Yeah, I think there’s a lot of different possibilities.

This artist’s impression demonstrates what an augmented reality sphere would be like

VGU: Do you think you’d be able to have a controller and the haptic feedback device?

TC: Yes, you could have a controller, be it keyboard and mouse, or handheld, where you can use that for direction and movement, then take your hands off of that and then reach out and interact with the virtual world. It would again kind of look weird if you look down and there’s nothing in your hands. I think the best way is certainly to interact without a controller, so motion tracking in VR, because it helps stop the break in immersion. And a lot of the VR demos at the moment are stationary, because you still have cables coming out of the devices, you still have camera systems that are looking in a particular direction – if you turn around then they can’t see you anymore – you still have coffee tables that you could hit your leg on. So they tend to be fairly static so you can have a static device that provides the feedback. But in the future I also think it’s going to be possible to embed the feedback device onto you.

VGU: So it would become a wearable?

TC: Into the actual goggles or onto your person so you’d get the feedback that way. That’d be pretty cool.

VGU: So, is there any sort of idea or plan in place to implement a controller-less haptic system into a first-person game? Is that the next step?

TC: Not quite yet. We’ll get there, but not quite yet. We’ve got a bunch of demos that we’ve made, which are what I call an introduction to the technology. They take you through, from super-simple so that you can’t possibly get it wrong, through more and more useful steps until you’ve got something that’s kind of useful. We’ve not made any VR demos, and that’s the next thing that we want to do.

VGU: So the next thing is to try and integrate the technology into some sort of game?

TC: A VR demo, yes, exactly.

VGU: Specifically VR?

TC: Could be AR [Augmented Reality], but I would like some sort of headset where you can see virtual objects and you can reach out and touch them.

VGU: Do you see any non-VR, non-AR application for this?

TC: Yeah, absolutely. In gaming, there’s the Kinect-style idea where the content’s still on the screen, but having some kind of tactile feedback when you’re interacting with the game would be really useful. The real breakdown of the Kinect, if you watch somebody using it, when they’re playing the games it’s fantastic – you can leap, you can jump, you can flail your arms around, and that’s brilliant. And then you have the menu screen and you’ve got two buttons, and pressing the buttons is really awkward, so you have to have these really big gestural movements in order to press them, whereas if you could hold your hand out and feel the button on your hand – “here’s two buttons, and you can press them like this” – rather than making these massive movements, that’s a big help there as well.

VGU: OK, well, I think that’s great. Thank you very much for talking to me.

TC: Cool, thanks.

Ultrahaptics is planning on collaborating with developers to create a really convincing demo that will hopefully get more people on board with their system. During his talk, Tom also mentioned that they’re currently working on creating “shapes” using the ultrasonic waves, such as cubes, spheres and pyramids. It will be interesting to see how the actual device is integrated, and the future looks promising for this potentially revolutionary control method.

There were a ton of VR demos and exhibits shown at SWVR 2015, so stay tuned for more previews in the coming days here on VGU.